Introduction

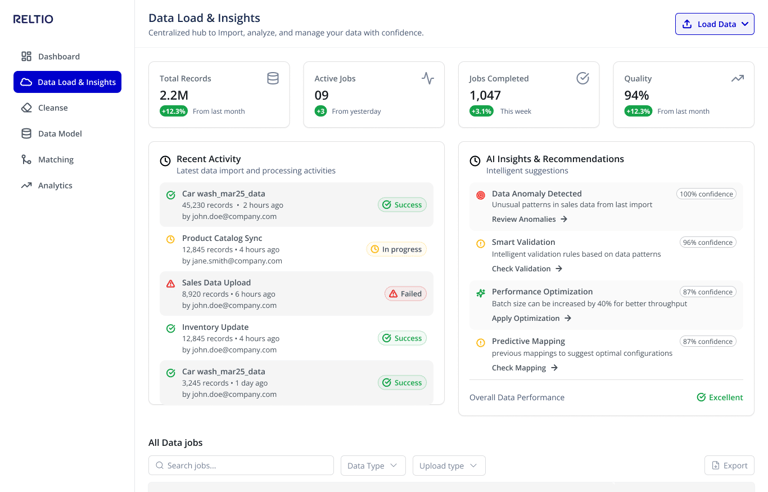

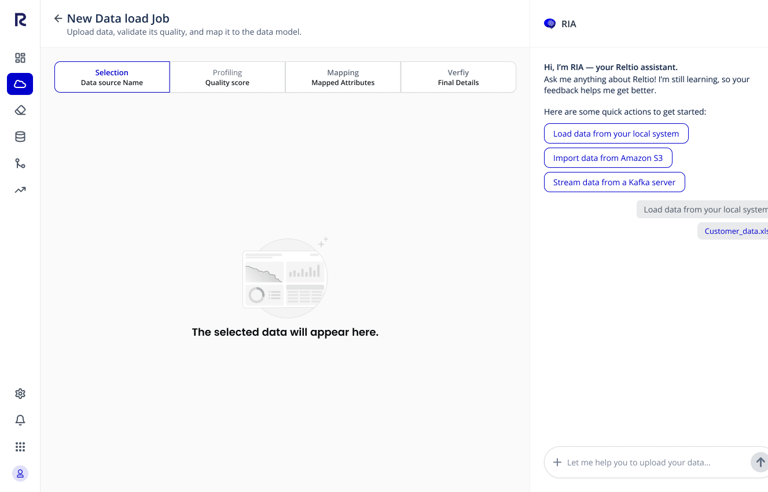

The Universal Data Loader (UDL) is the critical gateway for enterprise users to import data into our platform. However, the legacy experience was a significant productivity drain: it relied on repetitive, manual inputs housed within a static, non-informative UI.

As the Lead Product Designer, my primary objective was to integrate an AI-driven workflow to automate the heavy lifting. I aimed to shift the user paradigm from "Manual Data Entry" to "Intelligent Review," drastically reducing the time and effort required to upload complex datasets.

Where Data Meets Decisions


The Challenge

For Data Engineers and Business Analysts, data ingestion is high stakes—one incorrect mapping can corrupt downstream analytics. The legacy tool didn’t respect this reality.

The "Data Paralysis" Cycle

Cognitive Overload:

Users manually mapped 50+ columns for every upload, even for recurring jobs. No semantic understanding. No memory. The system outsourced schema knowledge to the user.

Black-Box Anxiety:

If a 10K-row file contained a single error, the system failed after processing, offering no visibility into “why.”

Operational Inefficiency:

Recurring tasks stretched into hour-long sessions. Abandonment hit 40%.

The workflow wasn’t just slow—it undermined user confidence.

Strategic Goal

To move from a Manual Input Model to an AI-Assisted Workflow.

Old Way: User does 100% of the work. System executes.

New Way: AI does 90% of the work. User reviews the remaining 10%.


Project Details

Duration

8 Weeks

My Role

Research & Design


Discovery & Research Strategy

To justify the investment in AI and automation, I needed to prove that "Manual Effort" was the primary cause of churn. I triangulated data from three specific sources:

01 Quantitative Validation {Survey data}

I launched an in-app survey to 313 active users to gauge baseline satisfaction.

Method: In-app intercept survey (N=33 responses).

Key Metric: The tool had a CSAT score of just 3.2/5.

The Finding: 35% mentioned “repetitive manual work” as their top frustration. Users didn’t dislike the product; they disliked the process of onboarding data.

02 Mining Customer Signals {User Feedback}

I analyzed 84 user-submitted ideas from our product feedback tool (Aha!) and reviewed 12 months of support tickets.

Method: Thematic clustering using spreadsheet analysis.

The Insight: Users were explicitly asking for automation.

"Why can’t it suggest mappings automatically?"

"I upload the same file every week; why do I have to map 50 columns again?"

Result: This confirmed that Auto-Mapping wasn't just a "nice-to-have"; it was a critical feature.

03 Competitive Benchmarking {Competitor Data}

I analyzed the onboarding flows of Informatica, Salesforce Data Cloud, and HubSpot.

The Gap: Competitors offered "Wizard-style" flows with inline validation. We were the only platform relying on a "Black Box" upload (no feedback, no validation).

The Opportunity: While competitors offered complex tools for IT pros, we identified an opening to build a "Smart Loader" for business users by leveraging AI to hide the complexity.


The Problem Definition

Synthesizing this research, I defined three core friction points:

Cognitive Overload: The system was semantically unaware. Users had to manually map 50+ columns to schema fields for every upload, even for recurring jobs. This forced users to memorize the database schema, shifting technical debt onto the user.

The "Black Box" Anxiety: The UI offered no feedback. If a 10,000-row file had one error, the system would fail silently after processing.

Operational Inefficiency: Simple data updates turned into hour-long tasks, leading to a 40% abandonment rate.


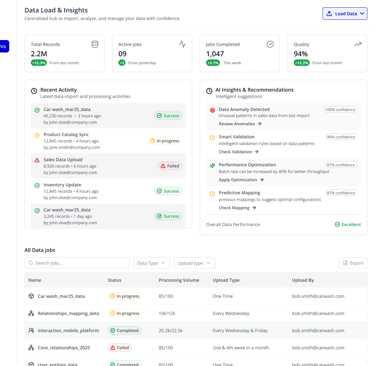

Solution: The AI-Integrated Workflow

I designed a two-pronged approach to address the friction points identified in discovery.

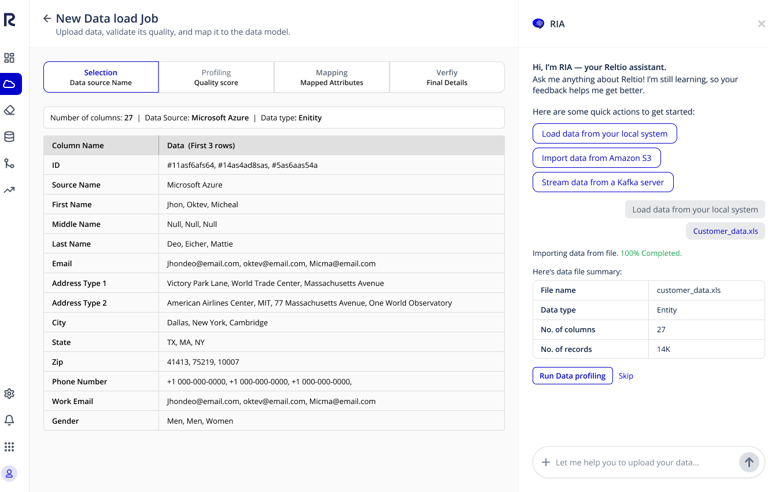

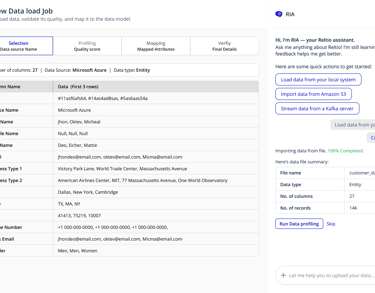

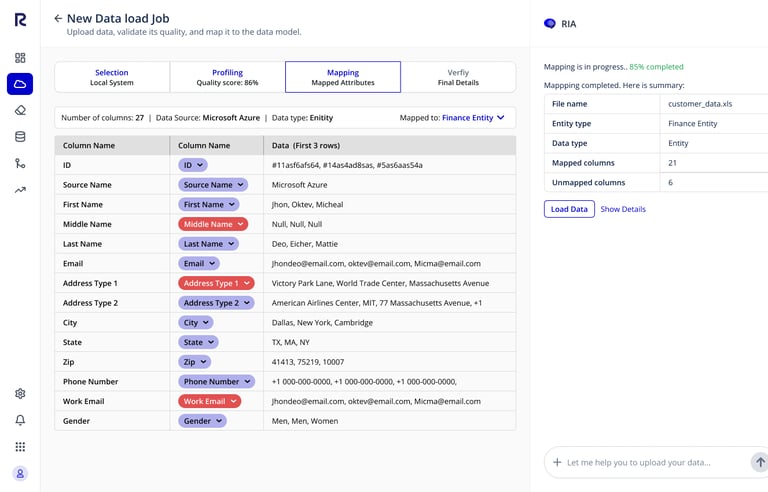

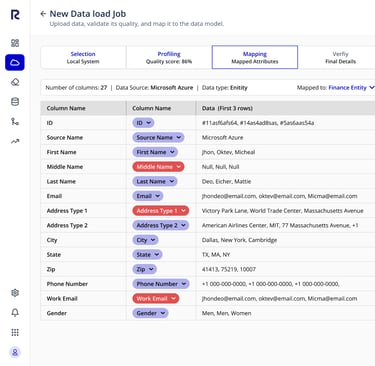

Feature 1: Heuristic Schema Matching (Solving Repetition)

I designed a pattern-matching engine that analyzes file headers and data types (e.g., recognizing specific regex patterns for "Phone Number" or "SKU").

The UX Shift: Instead of confronting an empty form, the user enters a Pre-Filled Mapping Screen.

Impact: The user's cognitive load shifts from "Recall" (remembering field names) to "Recognition" (verifying the AI's suggestions).
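To make the matching idea concrete, here is a minimal sketch of how header and value heuristics could pre-fill a mapping. It is an illustration under stated assumptions, not the production engine: the `SCHEMA` dictionary, its aliases, the regex patterns, and the `suggest_mapping` helper are all hypothetical names invented for this example.

```python
import re

# Hypothetical target schema: each field lists known header aliases and a
# regex that sample values are expected to match. All entries are assumptions.
SCHEMA = {
    "phone_number": {
        "aliases": {"phone", "phone number", "mobile", "tel"},
        "pattern": re.compile(r"^\+?[\d\s().-]{7,20}$"),
    },
    "sku": {
        "aliases": {"sku", "item code", "product code"},
        "pattern": re.compile(r"^[A-Z]{2,5}-\d{3,8}$"),
    },
}


def normalize(header):
    """Lower-case a header and collapse punctuation or underscores to spaces."""
    return re.sub(r"[^a-z0-9]+", " ", header.lower()).strip()


def suggest_mapping(header, sample_values):
    """Suggest a schema field for one column, or None if nothing fits."""
    name = normalize(header)
    # Step 1: try the header text against known aliases.
    for field, rule in SCHEMA.items():
        if name in rule["aliases"]:
            return field
    # Step 2: fall back to the data itself; every non-empty sample must match.
    values = [v for v in sample_values if v]
    for field, rule in SCHEMA.items():
        if values and all(rule["pattern"].match(v) for v in values):
            return field
    return None


columns = {
    "Phone_Number": ["+1 (555) 010-0199", "555-010-0123"],
    "Col7": ["AB-10234", "XYZ-0042"],
    "Notes": ["call back Tuesday", ""],
}
suggestions = {h: suggest_mapping(h, vals) for h, vals in columns.items()}
```

In this sketch, `Phone_Number` is matched by its header, `Col7` is matched only by the shape of its values, and `Notes` is left unmapped for the user to handle. That is the "Recognition" step: the screen opens with suggestions to verify rather than blanks to fill.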

Feature 2: In-Context Remediation (Solving the "Black Box")

To fix the "Silent Failure" issue, I introduced a Triage Dashboard.

The Logic: The system pre-scans the first 50 rows immediately. If anomalies (e.g., Date Format mismatch) are detected, they are flagged before the import begins.

The Interaction: Users can correct data cells directly in the browser (Excel-style interaction), preventing the need to abort, fix the file offline, and re-upload.
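The pre-scan logic can be sketched roughly as follows. This assumes a CSV upload and a single expected date format; the `prescan` function, the `DATE_FORMAT` constant, and the sample column names are hypothetical and only illustrate the idea of flagging anomalies before the import begins.

```python
import csv
import io
from datetime import datetime
from itertools import islice

PRESCAN_ROWS = 50          # the first 50 rows are scanned before import
DATE_FORMAT = "%Y-%m-%d"   # assumed expected format for date columns


def prescan(file_obj, date_columns):
    """Return (line_number, column, value) for each date anomaly found."""
    issues = []
    reader = csv.DictReader(file_obj)
    # Data rows start on line 2 because line 1 holds the headers.
    for line_number, row in enumerate(islice(reader, PRESCAN_ROWS), start=2):
        for column in date_columns:
            value = row.get(column) or ""
            try:
                datetime.strptime(value, DATE_FORMAT)
            except ValueError:
                # Wrong format or impossible date: flag it for in-browser fixing.
                issues.append((line_number, column, value))
    return issues


sample = io.StringIO(
    "id,created_date\n"
    "1,2024-01-15\n"
    "2,15/01/2024\n"
    "3,2024-02-30\n"
)
issues = prescan(sample, ["created_date"])
```

Each flagged tuple carries enough context (line, column, offending value) for a triage view to point the user at the exact cell, which is what makes inline correction possible without a re-upload.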

{Wireframes Iteration 01}


Designing for Trust

Even with accurate AI, usability testing uncovered a major blocker:

Users hesitated to click “Confirm.”

They feared invisible automation:

“How do I know the AI didn’t mix up ‘Created Date’ with ‘Modified Date’?”

The Iteration — Making the AI a “Glass Box”

To close the trust gap, I redesigned the review layer to expose the system’s decisions.

Confidence Scoring:

Green: Exact match, high confidence

Yellow: Fuzzy match → needs human review

Review-by-Exception:

A toggle to “Show only low-confidence mappings” let users focus on the 5 questionable fields instead of the 45 correct ones.
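As a rough illustration of how confidence scoring and review-by-exception fit together, the sketch below labels each suggested mapping green or yellow and then filters to the ones that need attention. It uses Python's standard `difflib.SequenceMatcher` as a stand-in similarity measure; the `FUZZY_THRESHOLD` value, the `Mapping` class, and both helper functions are assumptions for this example, not the shipped scoring logic.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher
from typing import Optional

FUZZY_THRESHOLD = 0.6  # assumed cut-off below which no suggestion is made


@dataclass
class Mapping:
    source: str            # header as it appears in the uploaded file
    target: Optional[str]  # suggested schema field, if any
    score: float           # similarity between 0 and 1
    status: str            # "green", "yellow", or "unmapped"


def score_mapping(source, schema_fields):
    """Label one header: exact match is green, fuzzy match is yellow."""
    key = source.strip().lower().replace(" ", "_")
    if key in schema_fields:
        return Mapping(source, key, 1.0, "green")
    # Pick the most similar schema field by character-level similarity.
    best = max(schema_fields, key=lambda f: SequenceMatcher(None, key, f).ratio())
    ratio = SequenceMatcher(None, key, best).ratio()
    if ratio >= FUZZY_THRESHOLD:
        return Mapping(source, best, round(ratio, 2), "yellow")
    return Mapping(source, None, round(ratio, 2), "unmapped")


def needs_review(mappings):
    """Review-by-exception: keep only mappings that are not exact matches."""
    return [m for m in mappings if m.status != "green"]


schema_fields = ["created_date", "modified_date", "email"]
headers = ["Created Date", "Email", "Mod Date"]
mappings = [score_mapping(h, schema_fields) for h in headers]
review_queue = needs_review(mappings)
```

Here only `Mod Date` lands in the review queue, as a yellow suggestion for `modified_date`, while the two exact matches stay out of the way. Exposing the status and score alongside each suggestion is what turns the automation into something the user can supervise.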

Result:

This small UX detail became the adoption breakthrough.

Users felt in control—supervised automation instead of blind automation.


Outcomes & Impact

⏱ 80% reduction in time-to-import for recurring datasets

📈 First-time success rate: 50% → 85%

📉 40% drop in data-import-related support tickets

Beyond the metrics, the workflow fundamentally changed the perception of the tool—from tedious and error-prone to intelligent, predictable, and trustworthy.

The ‘Why’ Behind the Work

This wasn’t just about usability—it was about trust, confidence, and time.

By connecting directly with users and benchmarking real-world standards, I turned UDL into a tool users want to use, not just one they have to use.

In the end, it’s about making complex things feel simple. That’s the magic of good UX.